It takes two to four hours* to conduct a user interview on a video call.

The more interviews you run, the more confidence you can have in the results. However, unless you have a large team of researchers, it’s not possible to run more than one session at a time.

*including the time to plan, schedule, conduct and summarise each session.

Problem

It’s expensive

A senior researcher costs £450-£600 per day or more. For a 6-week project, you’re looking at ~£27,000 standing between you and the answers to your questions.

It’s not a good use of your team’s time

Teams are already competing for hours in the day. Losing one or more people to conducting interviews can delay other work.

It takes too long

Each project takes weeks to complete: planning, scheduling, conducting and then analysing the results of each session.

I founded AnswerTime alone, designing, coding and launching the functional prototype myself.

Since presenting the concept at a meetup, I have formed a partnership with the team at Mindware AI to build and launch the next version of the product.

Strategy

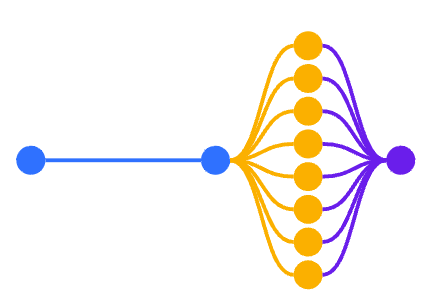

My strategy is to lower the cost of research by maximising the span of control for researchers*, enabling them to minimise the time it takes to get the answers they need.

Current model

The time it takes to conduct primary research grows with each additional interview session that you want to run, on top of the time it takes to prepare and coordinate the project.

The AnswerTime model

AnswerTime eliminates hiring, runs the user sessions in parallel and makes them asynchronous. Conversations are vectorised, and insights can be summarised instantly at any time.
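To make the difference between the two models concrete, here is a rough sketch of the researcher hours involved. The two to four hours per interview comes from the figure quoted at the top of this page; the function names and the one hour of preparation and one hour of review in the asynchronous case are illustrative assumptions, not measured values.

```python
def sequential_hours(n_interviews: int, hours_per_interview: float,
                     prep_hours: float = 0.0) -> float:
    """Current model: one researcher runs sessions one at a time,
    so the total grows with every interview added."""
    return prep_hours + n_interviews * hours_per_interview


def async_hours(prep_hours: float, review_hours: float) -> float:
    """Asynchronous model: the researcher only prepares the project and
    reviews the results, so the total does not depend on the number of
    participants."""
    return prep_hours + review_hours


if __name__ == "__main__":
    for n in (5, 10, 20):
        low = sequential_hours(n, 2.0)   # 2 hours per interview
        high = sequential_hours(n, 4.0)  # 4 hours per interview
        # Assumed: 1 hour to prepare, 1 hour to review (illustrative only)
        flat = async_hours(prep_hours=1.0, review_hours=1.0)
        print(f"{n} interviews: {low:.0f}-{high:.0f} h sequential, {flat:.0f} h asynchronous")
```

The point of the sketch is the shape of the two curves rather than the exact numbers: one rises with every participant, the other stays flat.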

A functional prototype

“It’s a cross between a survey and an interview”

If you’ve read this far, I implore you to go to the website and try it for yourself. However, if you’re short on time, here’s a summary of how it works:

Prepare a new project by letting the AI know who it will be speaking to and providing the opening question (the AI conducts the rest of the interview by following up on the participant’s answers)

Share a link (each question has a unique URL) with all participants and let them respond to you asynchronously

The full transcript from every conversation is available to view and download

The conversations can be summarised by the same AI, which will highlight the key themes and back them up with direct quotes
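The flow above can be sketched in a few lines of Python. This is a simplified illustration, not the code behind the prototype: `Project`, `Turn`, `run_interview` and `format_transcript` are hypothetical names, and `echo_model` is a stub standing in for the real language model so the example runs without any API calls.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Turn:
    speaker: str  # "ai" or "participant"
    text: str


@dataclass
class Project:
    audience: str          # who the AI will be speaking to
    opening_question: str  # the only question the researcher writes


# Hypothetical model interface: given the project and the transcript so far,
# return the next follow-up question.
AskModel = Callable[[Project, List[Turn]], str]


def run_interview(project: Project, answers: Iterable[str],
                  ask_model: AskModel, max_questions: int = 5) -> List[Turn]:
    """Open with the researcher's question, then let the model follow up
    on each participant answer until the question limit is reached."""
    transcript = [Turn("ai", project.opening_question)]
    questions_asked = 1
    for answer in answers:
        transcript.append(Turn("participant", answer))
        if questions_asked >= max_questions:
            break
        transcript.append(Turn("ai", ask_model(project, transcript)))
        questions_asked += 1
    return transcript


def format_transcript(transcript: List[Turn]) -> str:
    """Plain-text export of a conversation, one turn per line."""
    return "\n".join(f"{turn.speaker}: {turn.text}" for turn in transcript)


def echo_model(project: Project, transcript: List[Turn]) -> str:
    """Stub model (assumption): asks the participant to expand on their last answer."""
    return f"You said: '{transcript[-1].text}' Can you tell me more about that?"


if __name__ == "__main__":
    project = Project("freelance designers", "How do you currently find new clients?")
    answers = ["Mostly through referrals.", "Old clients recommend me."]
    print(format_transcript(run_interview(project, answers, echo_model)))
```

Because each participant gets their own transcript, any number of these conversations can run at once, which is what makes the sessions asynchronous.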

I built this app on the following stack:

The chat app was built by adapting a ChatGPT interface via Vercel

Storage is via Supabase, where all the conversations end up

Website front and back end (including user management and Stripe integration) is built in Bubble

Watch this video demo

Results

Daily cost to conduct user interviews is 100x lower*

* Based on conducting 5 interviews in one day, along with the average cost via AnswerTime’s pay-as-you-go model.

The researcher time spent conducting interviews is eliminated

All that teams need to do is prepare the survey and review the results.

I’m still testing the app in applied research situations and am currently building the next iteration. So far I have learned:

Participants will happily chat to an AI bot for longer than I expected

Trying to learn to code by building an app and using ChatGPT has been an incredibly frustrating and rewarding experience

“Build it and they will come” is, as most people will tell you, complete shite. Early-stage user acquisition is hard work